Mobile App A/B Testing: 5 Mistakes to Avoid

As marketers, we need to be constantly on our toes. It’s the nature of the role, especially in mobile growth. This ever-changing ecosystem forces us to work under tight deadlines, constantly readjusting our strategy and reassessing our operational methods. But in this rush for numbers and results, it’s vital that nothing gets left behind and no corners are cut.

After analyzing thousands of app store creative tests, we’ve seen what works and what doesn’t, why some companies are successful and where others fall short. Whether it’s the rush to get results or something else, this list of 5 mistakes to avoid when going through the app store testing process will help you to enjoy better CVR and engagement with your users, while not throwing hard-earned dollars down the drain.

This is a guest post written by Jonathan Fishman, Director of Marketing at StoreMaven.

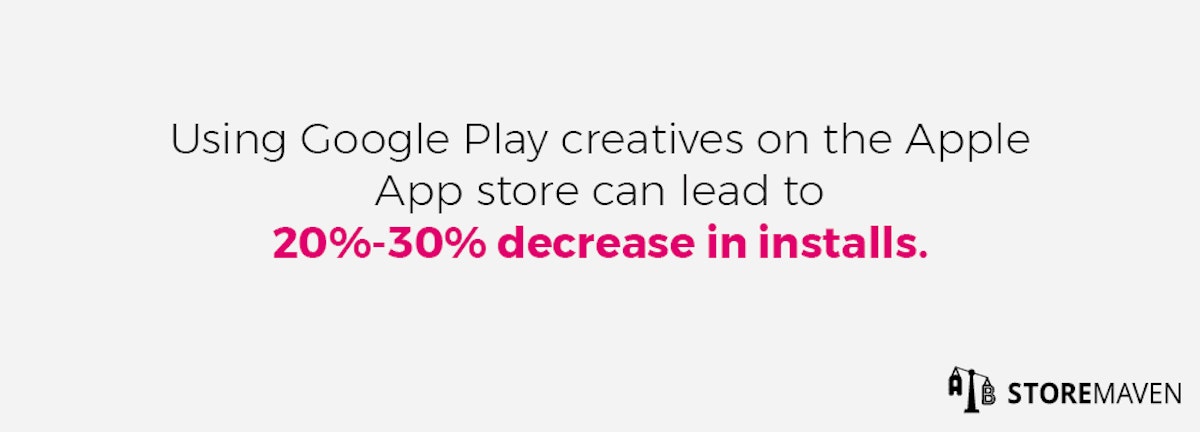

1. Do not test the same creatives for Google Play and the App Store

The App Store and Google Play are fundamentally different platforms, and they should be treated as such.

The differences go beyond design and layout: each store receives different traffic. The user base is different too; apps and games that are popular on one platform may well not enjoy the same success on the other.

Due to these significant differences between the two stores, it’s crucial to avoid a one-size-fits-all approach. A quick glance at the following data will show the consequences of making this mistake.

2. Never A/B test without a strategy

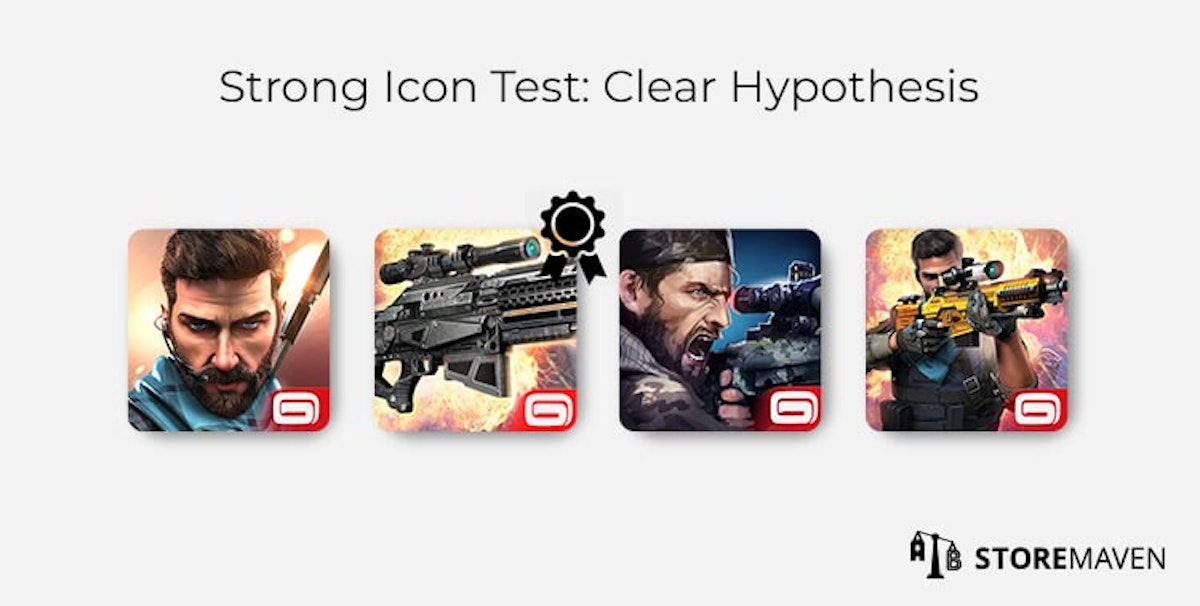

We, as marketers, are always under pressure to show numbers, results, and better conversion rates. But there’s one stage in this testing cycle that just can’t be rushed. Conducting proper research and developing strong hypotheses to test is crucial for your success in A/B testing. Choosing weak hypotheses, or making changes to your app creatives that are too minor or subtle, won’t make an impact. On the contrary, it can harm your efforts, because no valuable insights and no clear direction are driving the test cycle forward.

The following is a good example of a strong hypothesis: running an app icon test in which each variation tests a different aspect: a character, a gameplay element, and a combination of character and gameplay elements. This way, no matter which variation wins, you will gain a clear insight into what your audience responds to best.

3. Ending & concluding tests early is a bad idea

Many failed tests are also the end result of that pressure to show numbers quickly. In this case, you may have a good strategy, but the test itself was closed too early.
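To illustrate the statistical side of closing a test too early, here is a minimal sketch of a generic two-proportion z-test. It is not the method of any particular testing platform; the function name and the sample numbers are assumptions made up for illustration. It shows that the same conversion rates can be inconclusive with little traffic and conclusive with more.

```python
import math


def two_proportion_z_test(installs_a, visitors_a, installs_b, visitors_b):
    """Return (z, two-sided p-value) for the CVR difference between B and A.

    Hypothetical helper: a textbook pooled two-proportion z-test.
    """
    p_a = installs_a / visitors_a
    p_b = installs_b / visitors_b
    pooled = (installs_a + installs_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if se == 0:
        raise ValueError("no variance in the data; cannot compute a z-score")
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - cdf)


# Illustrative numbers only: 30% vs 40% CVR at two traffic levels.
_, p_small = two_proportion_z_test(3, 10, 4, 10)
_, p_large = two_proportion_z_test(300, 1000, 400, 1000)
print(f"small sample p-value: {p_small:.3f}")
print(f"large sample p-value: {p_large:.6f}")
```

With only 10 visitors per variation, the 10-point difference is indistinguishable from noise; with 1,000 per variation, the same difference is very unlikely to be chance.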

It’s more than a statistical aspect. For example, let’s say a mobile game’s highest-quality audience is more active on weekdays than on weekends. If a test starts mid-week and closes after receiving only four days of traffic, the results will almost certainly not be accurate. Any conclusion drawn from these results will miss your goals in the long run, and will also damage future test cycles.

Additionally, it’s rare to see a variation that is the clear winner on every single day of the test. You should always aim for a wider date range to give you the confidence of consistency. From our data, gathered from more than 5M users, we recommend running tests for a minimum of 7 days and pushing for a longer run of 10-14 days on average.
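As a rough sketch of these two checks (minimum duration and day-by-day consistency), the snippet below summarizes a test from daily counts. The `DailyResult` type, the `summarize_test` function, and the sample numbers are hypothetical; only the 7-day minimum comes from the recommendation above.

```python
from dataclasses import dataclass

MIN_DAYS = 7  # minimum test length recommended in the text above


@dataclass
class DailyResult:
    """One day of traffic for two variations (hypothetical structure)."""
    visitors_a: int
    installs_a: int
    visitors_b: int
    installs_b: int


def cvr(installs, visitors):
    """Conversion rate, treating a day with no visitors as 0."""
    return installs / visitors if visitors else 0.0


def summarize_test(days):
    """Report the duration check and how many days each variation won."""
    wins = {"A": 0, "B": 0, "tie": 0}
    for d in days:
        a = cvr(d.installs_a, d.visitors_a)
        b = cvr(d.installs_b, d.visitors_b)
        if a > b:
            wins["A"] += 1
        elif b > a:
            wins["B"] += 1
        else:
            wins["tie"] += 1
    return {
        "days_run": len(days),
        "long_enough": len(days) >= MIN_DAYS,
        "daily_wins": wins,
    }


# Made-up data: a test closed after only four days of traffic.
four_days = [
    DailyResult(100, 30, 100, 35),
    DailyResult(100, 32, 100, 31),
    DailyResult(100, 30, 100, 30),
    DailyResult(100, 28, 100, 33),
]
print(summarize_test(four_days))
```

A summary like this makes it obvious when a test was stopped before covering a full week, and when the daily winner flips back and forth.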

4. Avoid measuring results in an invalid way

Bear with us for this one, or go bring your data maven to assist. Even if your test is being run flawlessly, it’s easy to make the mistake of measuring results using a model that’s too simplistic.

Some companies, for instance, use pre- and post-test analyses that calculate the average CVR for the two weeks before the change and compare it to the average CVR after the change. This approach, more common than one would think, fails to take into account many variables that affect conversion and app units in the store, such as:

- keyword ranking

- overall ranking

- category ranking

- being featured

- level of user acquisition (UA) spending

- competitors’ changes (creative updates, UA spending, etc.)

- and more

Without accurately measuring the impact, companies can arrive at the wrong conclusions, such as that creative changes don’t matter, or that conversion rates were hurt when, in reality, they experienced a positive uplift. To know more about this, read this blog.
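To make this concrete, here is a small sketch with entirely made-up numbers showing how one of the variables listed above, a change in UA spending, can make a pre/post comparison point the wrong way. The traffic sources, counts, and function names are assumptions for illustration only.

```python
# (visitors, installs) per traffic source; all figures are invented.
pre = {"organic": (8000, 3200), "paid": (2000, 400)}
post = {"organic": (4000, 1800), "paid": (8000, 2000)}  # UA spend increased


def overall_cvr(period):
    """Blended CVR across all traffic sources in a period."""
    visitors = sum(v for v, _ in period.values())
    installs = sum(i for _, i in period.values())
    return installs / visitors


def per_source_cvr(period):
    """CVR for each traffic source separately."""
    return {source: i / v for source, (v, i) in period.items()}


print("overall pre :", round(overall_cvr(pre), 4))
print("overall post:", round(overall_cvr(post), 4))
print("by source pre :", per_source_cvr(pre))
print("by source post:", per_source_cvr(post))
```

In this example the blended CVR falls after the change, even though CVR rose for both organic and paid traffic. The drop comes purely from the larger share of lower-converting paid traffic, which is exactly the kind of effect a simple before-and-after average hides.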

5. Not optimizing for the right audience can harm your tests

Whether you develop a casual game or a functional app, you need to think about your audience. Different apps and games have very different users coming their way. When you know where your audience is coming from and what they are looking for, it’s so much easier to cater to their needs.

Let’s assume you have a casual game – mostly attracting decisive users who don’t care to spend additional time or effort researching. It’s easy to figure out you’ll need to spend your money and time optimizing your first impression frame – what these decisive users will have a chance to look at in the 3-6 seconds that they’ll glance at your page.

Another consideration is whether your game is available in other markets. If you’re targeting a variety of countries, you can localize or culturalize your creatives, which means adapting your app store assets to the language and culture of different regions.

Read these tips to successfully localize your app or game in any chosen market

TLDR

Keep these valuable tips in mind when setting up A/B tests for your app or game on the app stores:

- Google Play and the App Store are very different platforms, so do not make the mistake of testing the same creatives for both

- Conduct proper research & develop a strong hypothesis before you set up an A/B test

- Don’t be in a hurry to conclude the test, otherwise the results might not be accurate

- Don’t measure the impact of your tests with a model that’s too simplistic, otherwise you might fail to consider variables that affect conversion rate and app units

- Be clear on who your target audience is before conducting A/B tests
